11 must-know Python Pandas tips and tricks for data scientists

I use them on a daily basis

Table of contents

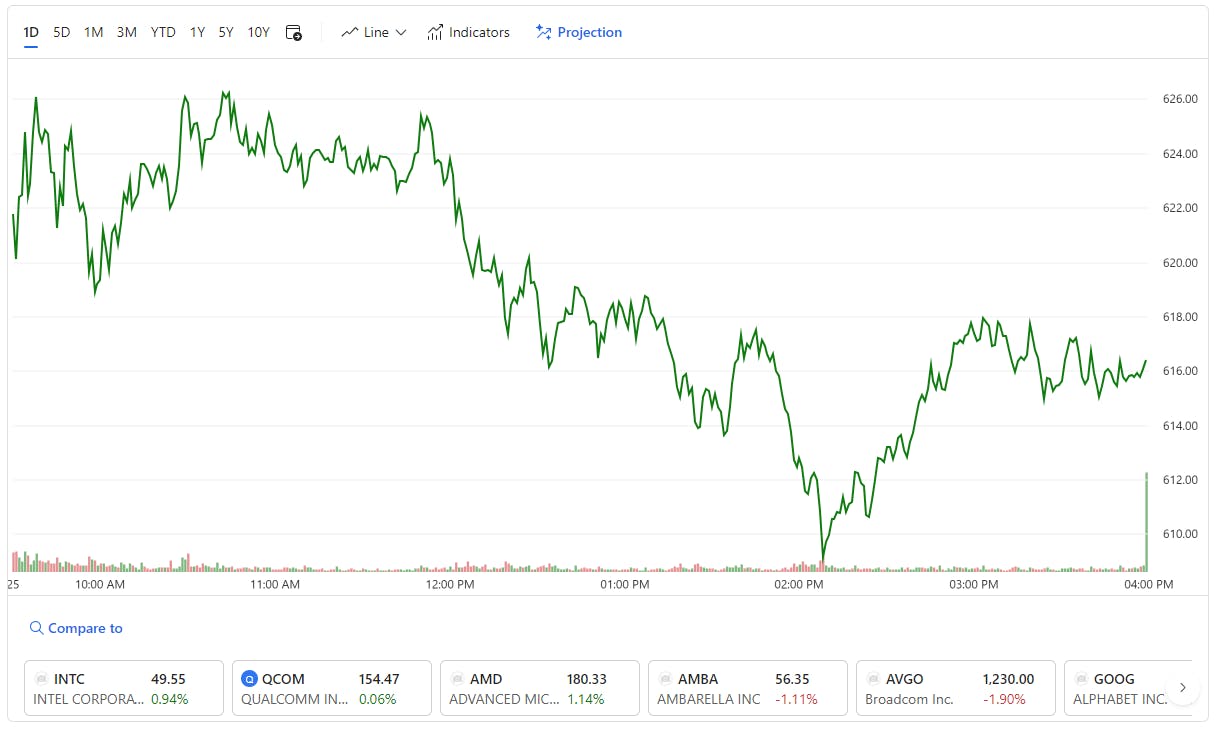

- Pull the NVDA exchange pricing data

- Number 11: Apply aggregations on DataFrame and Series

- Number 10: All you need is pandas_profiling

- Number 9: Easy way to convert data toint type

- Number 8: Break up strings in multiple columns

- Number 7: .str

- Number 6: Concatenate strings

- Number 5: Percentage of missing data

- Number 4: Highly correlated columns

- Number 3: Apply and lambda functions

- Number 2: drop_duplicates vs unique

- Number 1: Ufuncs

- Wrap-Up

You can have data without information, but you cannot have information without data.

Daniel Keys Moran, science fiction writer

Pandas is considered the most widely used tool for data manipulation, filtering, and wrangling. It comes with enormous features and functionalities designed for fast and easy data analytics. As a data scientist, I use its features on a daily basis, and in this post, I’d love to share with you some of the tricks of pandas.

I hope that this crafted list of features serves as a quick reference and gives you a good starting point for learning Pandas. The listed features come in no specific order, so no conclusions can be made on the order.

I will be using the NVDA ticker price data set for this post. So before going into the feature lists, I’ll share with you a few lines of code to download the data locally.

Pull the NVDA exchange pricing data

We will be using Yahoo! Finance's API to download some market data. For that, we will be using the yfinance Python package. Follow this link and install yfinance. I installed it using the following single line of code:

!pip install yfinance

import os

import numpy as np

import pandas as pd

import pickle

import yfinance as yf

Let's first pull the historical NVDA exchange rate from the Yahoo! Finance's API.

def get_yf_data(ticker, period = "1mo"):

'''Pull and cache yfinance data into a dataframe. Caching is important for avoiding rate limits.'''

pkl_file_name = ticker.replace(" ", "_") + "_" + period

cache_path = '{}.pkl'.format(pkl_file_name).replace('/','-')

try:

# Check if the pickle file is in the working directory.

f = open(cache_path, 'rb')

ticker_df = pickle.load(f)

print('Loaded {} from cache'.format(ticker))

except (OSError, IOError) as e:

# The data is not locally avaiable. Download it and store it in a form of pickle file.

print('Downloading {} from yfinance'.format(ticker))

ticker_df = yf.download(ticker, period=period)

ticker_df.to_pickle(cache_path)

print('Cached {} at {}'.format(ticker, cache_path))

return ticker_df

# Pull NVDA and AAPL price from exchange data

NVDA_price= get_yf_data("NVDA", period="10d")

# If you would like to download more tickers you try the following:

NVDA_price= get_yf_data("NVDA APP TSLA", period="1mo")

# The above will download the Nvidia, Apple and Tesla share prices.

NVDA_price.tail()

Now that we have the data ready at hand, let's have a look at my selection of Pandas tips and tricks.

Number 11: Apply aggregations on DataFrame and Series

NVDA_price.agg(['mean', 'min', 'max'])

NVDA_price.High.agg(['mean', 'min', 'max'])

.describe() provides the same functionality but .agg() is more flexible as far as I can tell.

NVDA_price.describe()

Number 10: All you need is pandas_profiling

pandas_profiling is a Python library mainly used for initial data exploration. A running example:

# Make sure that you have the pandas_profiling installed.

# To install pandas_profiling run: !pip install pandas_profiling

import pandas_profiling

NVDA_price.shape

# Chart the NVDA pricing data

pandas_profiling.ProfileReport(NVDA_price)

This helps you with a few lines of code and time-saving tricks when exploring the data. The pandas_profiling library comes with more features than the one listed above. Interested readers are referred to the official website.

The package provides an extensive exploratory data analysis (EDA) that includes reporting on different types of correlations, such as Pearson's r and Phik coefficient (φk). Phik (φk) is a new correlation coefficient that works consistently between categorical, ordinal, and interval variables. Phik can capture non-linear dependency and revert to the Pearson correlation coefficient in the case of the bivariate normal input distribution. Read more here.

Number 9: Easy way to convert data toint type

This is a simple but useful trick! When converting the data into int type, the most common approach is to use .astype('int'). However, if there are strings or other invalid types, you will face an error, and the conversion will fail. An alternative is to use the pd.to_numeric() command which will handle the errors. You may try this for yourself.

Number 8: Break up strings in multiple columns

A simple trick for splitting a column into multiple columns using a split function:

NVDA_price['column_name'] = NVDA_price['column_name'].str.split(" ", expand=True)

Number 7: .str

I’d like to show you how awesome .str is with Pandas Series. Check the following piece of code:

# Covert the Data column to str.

NVDA_price['Date_str'] = NVDA_price.index.astype(str)

# And take the first 4 elements of the resulting string.

NVDA_price['Year_str'] = NVDA_price['Date_str'].str[:4]

It's awesome how they make this bit of magic work.

Number 6: Concatenate strings

It’s so easy with the pandas to create a new column by combining two existing columns:

NVDA_price['ts'] = NVDA_price.index.values.astype(np.int64) // 10 ** 9

NVDA_price['price_ts'] = NVDA_price["Open"].map(str) + " - " + NVDA_price["ts"].map(str)

NVDA_price['price_ts'].head()

and to get them back again:

NVDA_price['price'], NVDA_price['ts'] = zip(*NVDA_price.price_ts.str.split(' - ', 1))

NVDA_price[['price_ts', 'price', 'ts']].head()

Number 5: Percentage of missing data

To get the percentage of missing data in columns and print them in descending order:

# gives % of missing data in columns!

(NVDA_price.isnull().sum()/NVDA_price.isnull().count()).sort_values(ascending=False)

Number 4: Highly correlated columns

Let’s assume that we want to build a predictive model and we would like to know which columns are highly correlated (bigger than 0.10) to the column Volume!

corr_coeff = NVDA_price.corr()['Volume'].abs().sort_values()

corr_coeff[corr_coeff > 0.10]

Number 3: Apply and lambda functions

One of the most awesome things that you can do with pandas is that you can store objects such as networkx graphs in them. Then you can easily .apply() the metrics and algorithms on each graph and see the tabulated results in a nice pandas dataframe. You can also easily .apply() custom functions or lambdas to modify all objects at once. The index then can be used to keep track of everything and to keep results near their original objects.

In the example let’s assume that you have multiple networks from different years. We will make a graph at random.

import networkx as nx

import itertools

import random

years, graphs = list(zip(*[(year, nx.barbell_graph(random.randint(2,10),random.randint(2,50))) for year in range(2010,2019)]))

df = pd.DataFrame({"graph": graphs}, index=years)

df["number_of_nodes"] = df.graph.apply(nx.number_of_nodes)

df["number_of_edges"] = df.graph.apply(nx.number_of_edges)

df["transitivity"] = df.graph.apply(nx.transitivity)

def nodes_odd_degree(g):

# Calculate list of nodes with odd degree

nodes_odd_degree = [v for v, d in g.degree() if d % 2 == 1]

return nodes_odd_degree

def len_odd_node_pairs(g):

# Compute all pairs of odd nodes and return the length of list of tuples!

odd_node_pairs = list(itertools.combinations(nodes_odd_degree(g), 2))

return len(odd_node_pairs)

df["nodes_odd_degree"] = df.graph.apply(nodes_odd_degree)

df["len_nodes_odd_degree"] = df["nodes_odd_degree"].apply(len)

df["len_odd_node_pairs"] = df.graph.apply(len_odd_node_pairs)

# And now print a selection of columns:

df[["number_of_nodes", "number_of_edges", "transitivity", "nodes_odd_degree", "len_nodes_odd_degree", "len_odd_node_pairs"]]

Number 2: drop_duplicates vs unique

Let’s assume that you have a column with millions of entries and you need to find the unique set of values It. df.column_name.drop_duplicates(keep="first", inplace=False) is a lot faster than df.column_name.unique(). Can be tested using %timeit.

The keep="first" option drops all the duplicates except for the first occurrence.

Number 1: Ufuncs

As explained here Pandas Ufuncs is much better than .apply command.

Wrap-Up

This post should give you an idea of some Pandas tricks commonly used by data scientists. The list aims to make your code more efficient.

In my next post, I'll share some basic concepts you need for working with data in PySpark. The post will cover topics such as reading and writing data from CSV files, along with some basic statistical analysis and visualization of data.

Notes:

1 - The official pandas cheat sheet can be downloaded from here.

2- Link to the notebook of this tutorial.

Refernces:

1 - Data School

2 - Lukas Erhard

Please leave a message below if you would like to share your thoughts or if you like to share your tricks on Pandas.